System Design Study: 40 Million reads per second using Integrated Cache by Uber

This article takes a deep dive into a recent Uber blog post: How Uber Serves Over 40 Million Reads Per Second from Online Storage Using an Integrated Cache

Docstore Background

Docstore is Uber's in-house distributed database built on MySQL. It stores tens of petabytes of data and serves tens of millions of requests per second.

Microservices from every business vertical at Uber use Docstore to persist their data. The most common ask from these services is low latency, high performance, and scalability from the database, even as they keep generating heavier workloads.

Docstore Architecture

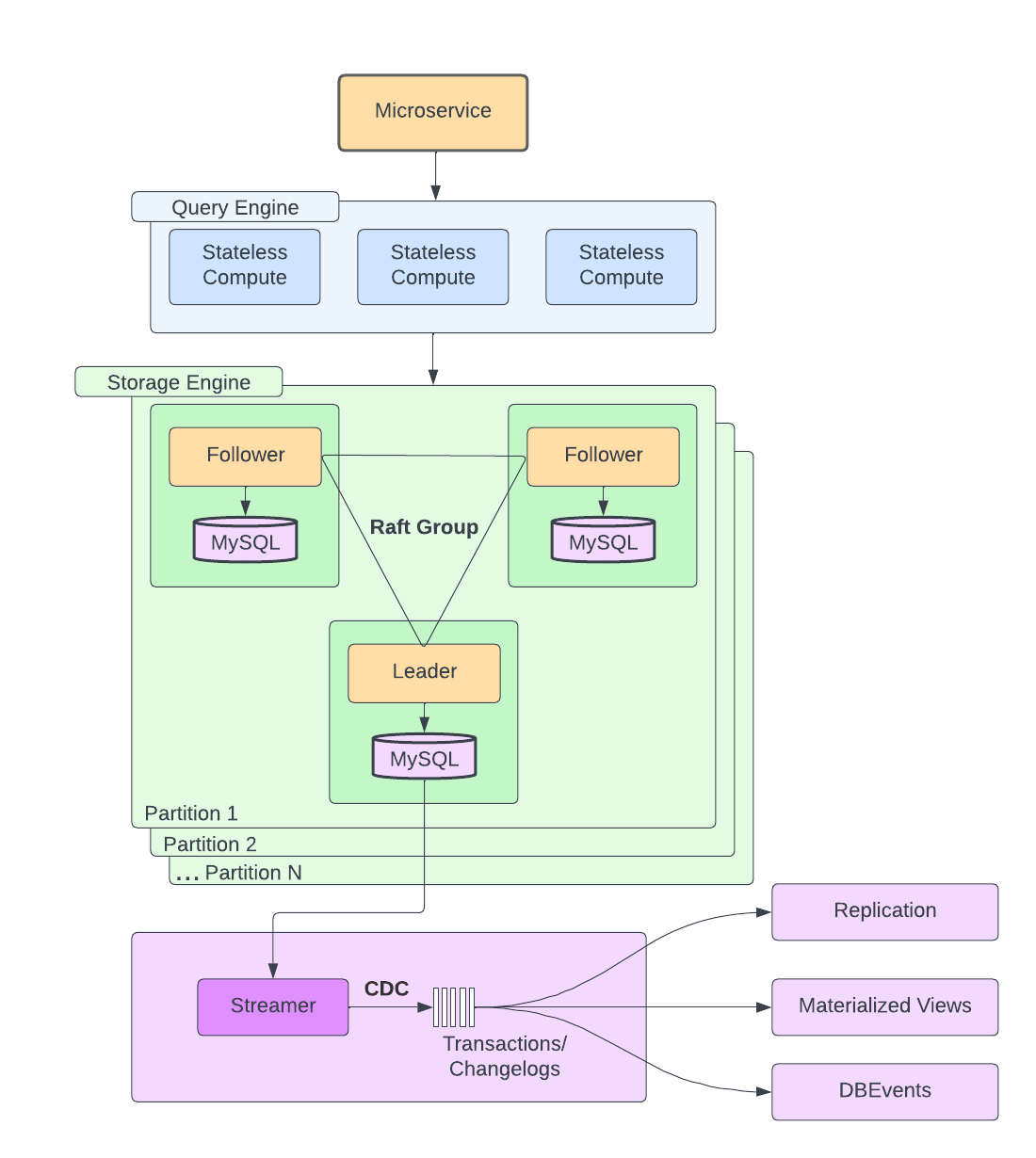

Docstore has three main layers:

a stateless query engine layer

a stateful storage engine layer

a control plane

The query engine layer is mainly responsible for query planning, routing, node health monitoring, request parsing, validation, etc.

The storage engine is split into multiple partitions, and each partition holds one shard of the whole data set. Each partition has one MySQL leader node and two MySQL follower nodes. The storage engine has several responsibilities: consensus among the nodes in a partition via the Raft algorithm, replication, transactions, concurrency control, and load management.

The control plane is a component of Docstore that processes change logs and is out of scope for this blog.

Challenges

At any company, backend services want a database backed by disk-based storage so that the data they persist can be retrieved later. At Uber, microservices were using Docstore comfortably until one client needed a much higher read throughput than any existing client.

We would probably think this way: we need high read throughput, so let's scale and increase the number of instances of the underlying database. Well, here comes the interesting part.

As per the blog, scaling Docstore in the above scenario would have been very costly and would have posed serious operational and scaling challenges. Here are some challenges faced when services demand low-latency reads:

Limited performance: For every database, no matter how much you optimize the queries and the data model, there is a ceiling on the performance you can get out of it.

Horizontal & Vertical Scaling: Upgrading to better hosts (vertical scaling) has a limit, because at some point the database engine itself becomes the bottleneck. Splitting data across multiple shards (horizontal scaling) leads to more operational complexity as the data volume grows.

Cost: Both horizontal and vertical scaling increase cost. Hence, they are only temporary solutions if you are going to face an unprecedented amount of traffic on your database.

Well then, what can we do next to solve this problem of a customer who has an unprecedented amount of read requests coming in?

Enter the solution: caching

Caching

Companies commonly add a cache to their microservices to serve a high volume of reads. Uber uses Redis as its distributed caching solution. The most common approach is the cache-aside strategy, in which reads go to the cache first. On a cache hit, the cached value is returned. On a cache miss, the data is fetched from the database and then stored in Redis to speed up future reads.
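The cache-aside read path described above can be sketched in a few lines of Python. This is only an illustration: a plain dictionary stands in for Redis, a second dictionary stands in for the database, and the class and variable names (`CacheAsideReader`, `load_from_db`, `rider:1`) are made up for this example rather than taken from Uber's code.

```python
from typing import Callable, Dict, Optional


class CacheAsideReader:
    """Cache-aside reads: try the cache first, fall back to the database on a miss."""

    def __init__(self, load_from_db: Callable[[str], Optional[str]]) -> None:
        self._cache: Dict[str, str] = {}   # hypothetical stand-in for Redis
        self._load_from_db = load_from_db  # hypothetical stand-in for a database read
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> Optional[str]:
        if key in self._cache:
            # Cache hit: answer without touching the database.
            self.hits += 1
            return self._cache[key]
        # Cache miss: read from the database, then populate the cache.
        self.misses += 1
        value = self._load_from_db(key)
        if value is not None:
            self._cache[key] = value
        return value


database = {"rider:1": "Alice"}
reader = CacheAsideReader(database.get)
first = reader.get("rider:1")   # miss: loaded from the database and cached
second = reader.get("rider:1")  # hit: served from the cache
```

Note that the application code owns both steps (the lookup and the fill), which is exactly why each team ends up maintaining its own copy of this logic.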

But, this caching implementation has the following challenges at Uber:

Each team maintains its own caching implementation (which is essentially duplicate code) and also maintains its own set of cache invalidation logic.

In the case of a region failover, each service would either have to use replication to keep serving reads at the same latency, or suffer high latencies while the cache warms up in the other region.

So, having seen the problem: what if Docstore itself offered one common caching solution as a service?

CacheFront

Uber decided to build an integrated caching solution for all teams at Uber called CacheFront for Docstore with the following high-level goals:

Minimize the need for horizontal/vertical scaling to support low latency reads for their clients.

Improve overall P50 and P99 read latencies, especially during sudden traffic spikes.

Centralize the caching solution and replace the existing (or yet to be implemented) solutions within each team. This lets developers focus on product and business use cases and shifts responsibility for Redis to the Docstore team (which deals with databases).

The new cache integration should be easy to adopt through the existing Docstore client.

Caching should be separate from Docstore's partitioning scheme to avoid common issues caused by hot keys, shards, or partitions. This allows the caching layer to scale independently.

CacheFront Design

The Docstore team realized that more than 50% of the traffic from Uber teams consisted of point reads. A point read is a read request that looks up a single row by its primary key and partition key. Point reads were therefore chosen as the first use case for the cache integration.

Since Docstore's query engine already serves reads and writes to clients, it was the natural place to integrate the cache: the cache stays decoupled from the disk-based storage engine, and the two can scale independently. Thus, the query engine layer implemented an interface to Redis with a provision for cache invalidation.

They came up with the following design:

Integrated caching is an opt-in feature at Uber. Services that require strong consistency can bypass the cache and query Docstore directly. Other services can enable caching on a per-database, per-table, or even per-request basis.

CacheFront uses the cache-aside strategy to implement cached reads. The key point about cache invalidation in the CacheFront design is that keys expire after a default TTL of 5 minutes. The TTL can be set lower than 5 minutes, but doing so does not significantly improve consistency across multiple nodes in the MySQL cluster.
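To make the TTL behaviour concrete, here is a minimal Python sketch of a cache whose entries expire after a fixed time. It is an in-memory illustration only: `TTLCache` and its injectable `clock` parameter are hypothetical names chosen for this example, and a real Redis deployment would normally let the server handle expiry (for example through the `EX` option of `SET`) instead of checking timestamps in application code.

```python
import time
from typing import Callable, Dict, Optional, Tuple

DEFAULT_TTL_SECONDS = 300.0  # the 5-minute default mentioned above


class TTLCache:
    """In-memory cache whose entries expire ttl_seconds after they are written."""

    def __init__(
        self,
        ttl_seconds: float = DEFAULT_TTL_SECONDS,
        clock: Callable[[], float] = time.monotonic,  # injectable so tests can fake time
    ) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}  # key -> (value, expires_at)

    def set(self, key: str, value: str) -> None:
        self._entries[key] = (value, self._clock() + self._ttl)

    def get(self, key: str) -> Optional[str]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            # Expired: drop the entry so the caller falls back to the database.
            del self._entries[key]
            return None
        return value


now = [0.0]                       # fake clock, advanced by hand
cache = TTLCache(clock=lambda: now[0])
cache.set("row:1", "v1")
now[0] = 299.0
fresh = cache.get("row:1")        # still within the TTL
now[0] = 300.0
expired = cache.get("row:1")      # TTL elapsed, entry is gone
```

With TTL-only invalidation, a row updated in the database right after being cached can stay stale for up to the full TTL, which motivates the next section.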

Problem: Conditional Update

Docstore supports conditional updates: a single write request can affect one or more rows, depending on a filter condition. For example, we may want to change the holiday schedule for all restaurants in a given region. Since the caching layer cannot determine which rows (or restaurants) a conditional update will affect, the changes need to be applied first in the storage engine (database) and only then reflected in the query engine (cache).

Solution: Leveraging Change Data Capture for Cache Invalidation

Uber uses a streaming service called Flux, which reads the latest MySQL binlog events in the storage engine layer and publishes them to a list of consumers that are responsible for making the corresponding edits in Redis.
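A consumer of such a change stream could look roughly like the Python sketch below. Everything here is an assumption made for illustration: the `ChangeEvent` fields, the `table:primary_key` cache-key format, and the choice to delete affected keys (rather than overwrite them) are not taken from Flux or Docstore, and a dictionary again stands in for Redis.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class ChangeEvent:
    """Hypothetical shape of one row-level change read from the binlog."""
    table: str
    primary_key: str
    operation: str  # "insert", "update", or "delete"


def cache_key(table: str, primary_key: str) -> str:
    # Hypothetical key format; the real format is not described in the article.
    return f"{table}:{primary_key}"


def apply_change_events(cache: Dict[str, str], events: Iterable[ChangeEvent]) -> int:
    """Invalidate the cached copy of every changed row; return how many keys were removed."""
    invalidated = 0
    for event in events:
        if cache.pop(cache_key(event.table, event.primary_key), None) is not None:
            invalidated += 1
    return invalidated


# A conditional update touched restaurants 1 and 2; restaurant 9 was never cached.
cache = {"restaurants:1": "open", "restaurants:2": "open", "restaurants:3": "open"}
events = [
    ChangeEvent("restaurants", "1", "update"),
    ChangeEvent("restaurants", "2", "update"),
    ChangeEvent("restaurants", "9", "update"),
]
removed = apply_change_events(cache, events)
```

The point of the design is that the consumer never has to evaluate the filter condition itself: the database has already resolved it into concrete row-level changes.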

The final reads and writes including cache invalidation look like this:

Since reads and writes happen at the same time, two types of inconsistency can arise:

Writes Inconsistency

Since reads and writes both go through the query engine at the same time, a change delivered by CDC via Flux might overwrite a newer value that has already reached Redis from the database. Uber tackles this with a simple mechanism: a version number (the latest timestamp) is maintained at the row level, and a change is written to the Redis cache only if it is newer than the timestamp of the row currently cached. Stale writes are therefore ignored.
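The version check can be illustrated with a short Python sketch. The names `CachedRow` and `put_if_newer` are hypothetical, and a dictionary stands in for Redis. In a real Redis deployment the comparison and the write would have to happen atomically (for example in a server-side script); this sketch ignores concurrency and shows only the comparison rule.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class CachedRow:
    value: str
    version: int  # row-level timestamp used as the version number


def put_if_newer(cache: Dict[str, CachedRow], key: str, row: CachedRow) -> bool:
    """Write row to the cache only if it is strictly newer than the cached copy."""
    current = cache.get(key)
    if current is not None and current.version >= row.version:
        return False  # stale (or duplicate) write: ignore it
    cache[key] = row
    return True


cache: Dict[str, CachedRow] = {}
wrote_first = put_if_newer(cache, "row:1", CachedRow("new value", version=10))
wrote_stale = put_if_newer(cache, "row:1", CachedRow("old value", version=5))
wrote_newer = put_if_newer(cache, "row:1", CachedRow("newest value", version=12))
```

Because every writer applies the same rule, the order in which the read path and the CDC path reach the cache no longer matters: the entry with the highest version wins.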

Reads Inconsistency

While capturing data changes with Flux is better than relying only on Redis TTLs to expire keys, it is still eventually consistent and may return inconsistent results to clients for a few minutes. Thus, Uber provides a dedicated endpoint to Docstore clients for stronger consistency guarantees. This endpoint explicitly invalidates the cached rows that have been updated in the database, thus providing stronger consistency for future read requests.

The above efforts show great dedication by the Docstore team to providing a much higher read throughput to their clients. This lays a foundation that will help other teams in the future. Great job!

That covers the design of Docstore's integrated cache. The original Uber post goes further and describes how the team rolled out the integrated cache, along with some common issues they faced along the way.


Resources

How Uber serves over 40M reads using a Cache

Database Storage Engine by Wikipedia
